Do not go gentle into that good night / Rage, rage against the dying of the light.

~ Dylan Thomas

Thomas’ villanelle to his dying father is one of the most iconic English poems of the 20th century. It is also curiously relevant today, though not in a literal sense. The traditional American zeitgeist is the willingness to step forward fearlessly into the unknown, and in doing so to illuminate it and to dispel infantile terror of the dark. Sadly, the contemporary spirit is one of moribund confidence and the acceptance of stagnation.

There was nothing more indicative of the American spirit than the opening lines of Star Trek: The Original Series: “To boldly go where no man has gone before.” The root of the phrase’s power lies in action, an acceptance that man is capable of seizing control and carrying himself into space. Yet, despite the television series’ timing, the American people were not going boldly into the night, or darkness of space, but rather were starting a retreat that continues into the present.

The retreat is not an apparent one. To all appearances there has been no pell-mell flight from a battlefield with weaponry and protective gear to signal a loss of confidence. To quote Kevin D. Williamson, “Nothing happened.” It is the lack of action, the stagnation, that signals that a retreat has occurred.

In his book Slouching Towards Gomorrah (1996), the late Robert Bork (1927 – 2012) catalogued the ways he believed America had declined since his youth. Buried among the musings are some anecdotes about Bork’s time at Yale as a young law professor in the late 1960s. In that decade, according to Bork, the university had quietly relaxed its admissions criteria and admitted applicants who would not have qualified under the previous standard. From a position of authority, Bork observed as these young people – mostly men, as the problem was concentrated at the undergraduate level and the only women at Yale at the time were graduate students – struggled academically due to a lack of adequate preparation. Many of these new students began to flunk as individual faculty refused to dilute their syllabi or grading standards.

As he explained, the stakes at the time were particularly high: in 1968, the time of Bork’s first semester, the draft and deployment to Vietnam would immediately and ruthlessly punish academic failure. Additionally, the students described largely came from middle America and bore the full weight of their families’ and local communities’ expectations, which made failure particularly humiliating. Although Bork rejected the Marxist and anti-intellectual aspects of the 1968 student protests, he presented a hidden facet of the protestors, whom he identified as young people angry at a system they felt had betrayed them and doomed them to failure. The message extracted from a well-intentioned policy change was that these people were ones who couldn’t – they couldn’t keep up with their peers, they couldn’t succeed, and all the indicators of their time pointed toward a truncated future. In short, they didn’t matter; they were not strictly necessary for broader society. Aside from property destruction, the turn toward Marxism and anti-intellectualism was a retreat, a flight from reality. With the rout – entirely self-imposed, since the simple solution to the problem was to go to the library and catch up rather than burn the books, which is the choice the students made – the United States unknowingly set off on a path of becoming a nation of “cannots.”

To present an analogy, in the training of thoroughbred racehorses, a promising colt is raced against another, less able one in order to build the former’s confidence. The second horse is not only expected to lose, he is rewarded for doing so, but very often at the cost of his spirit and willingness to compete. This analogy is somewhat limited, since the Yale student protestors of the 1960s chose the role of second horse themselves, but the results (anger, wanton destruction, and futile rage in the face of their inadequacies) are human indicators of a broken spirit and the loss of a competitive edge. These two traits have, since the 1960s, trickled down through all echelons of American society, accompanied by all the symptoms of anger and unnecessary misery. The American people have become the second horse.

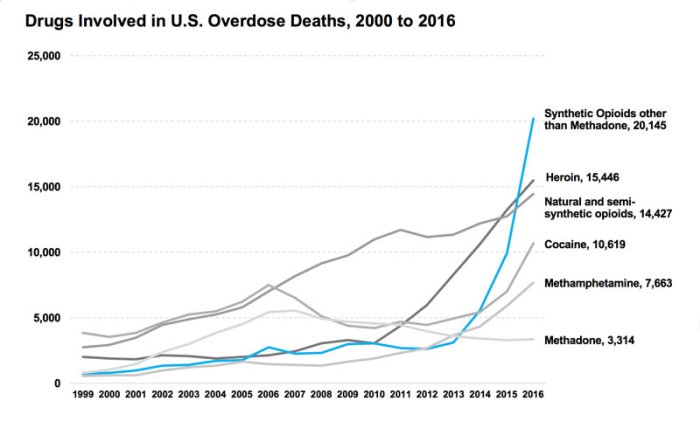

All statistical evidence indicates that the quality of life in America is higher than ever before; we have record low rates of crime, better healthcare, and unparalleled access to consumer goods and luxury technologies. Under circumstances such as these, we should possess an equally high level of national confidence and happiness. But this is not the case. A Gallup poll from late 2017 shows that, despite the country’s increased prosperity under the new administration, subjective, or perceived, happiness has declined since the 2016 election. In other words, middle America is no happier now than it was pre-November 2016: it is less happy. Combined with the rise of “deaths of despair” (the official term for deaths from addiction or suicide in middle-aged or younger people) and the mediatized claims of loss of opportunity due to – O tempora, o mores – technology and the new economy, the story is one of surrender, nothing else.

In the narrative, especially the one surrounding the 2016 election, the story is one of middle America neglected and in need of special favors and treatment. As part of this picture, its authors and advocates on both sides of the political aisle sneer at the idea of self-determination, in the way of Michael Brendan Dougherty in the piece that Williamson rebutted with his “Nothing happened.” Technology is a particular target of Dougherty’s ire, cast as somehow destructive to a utopian version of American community and family – his most recent article, from May 1, 2018, was an apology for using the internet as a work medium – while he ignores the path to financial independence and economic integration that it provides. Hatred of technology and change, a desire to return to the “good old days,” is a symptom of the retreat.

Today the logical and economic fallacy, identified by AEI’s Arthur Brooks, of “helping poor people” instead of “needing them” is dominant. Yet it is a betrayal at all levels of the American ethos. Protectionism, insularity, and above all else, a desire to justify the degraded state of the American worker by pinning the fault on a wide range of people and things, are all signs of the willful betrayal of the American spirit. Although there are individual Americans who are leaders in technology and new industry, the American people are collectively falling behind, and our policy-makers are rewarding us for becoming the second horse through protectionism and populist speeches that reinforce the notion that there is a wronged group of “left behind.” We are no longer going “boldly where no man has gone before”; instead we are docilely being led into the “good night,” all while thinking that we are raging against it. Without a change, the pasture of irrelevance awaits us.