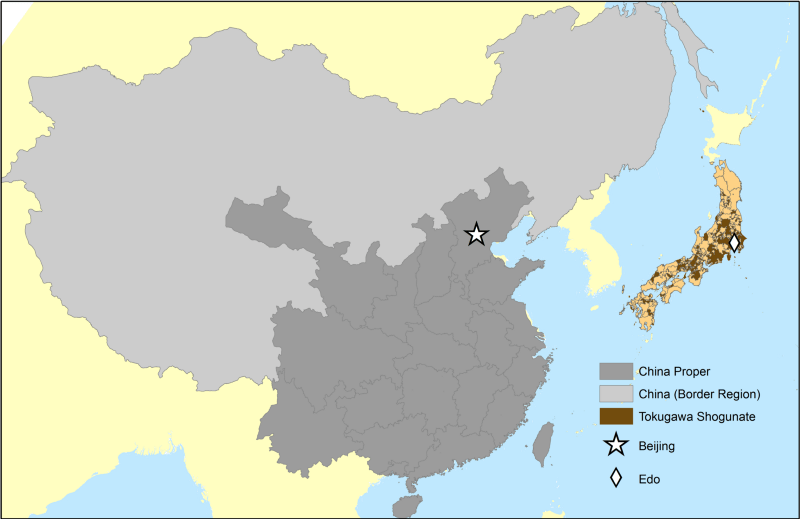

Many 20th-century theorists who advocated central planning and control (from Gaetano Mosca to Carl Landauer, and hearkening back to Plato’s Republic) drew a direct analogy between economic control and military command, envisioning a perfectly functioning state in which the citizens mimic the hard work and obedience of soldiers. This analogy did not remain theoretical: the regimes of Mussolini, Hitler, and Lenin all attempted to model economies along military principles. [Note: this is related to William James’ persuasion tactic of “The Moral Equivalent of War” that many leaders have since used to garner public support for their use of government intervention in economic crises from the Great Depression to the energy crisis to the 2012 State of the Union, though one matches the organizing methods of war to central planning and the other matches the moral commitment of war to intervention, but I digress.] The underlying argument for “central economic planning along military principles” was that the actions of citizens would be more efficient and harmonious under the direction of a scientific, educated hierarchy with highly centralized decision-making than if they were allowed to do whatever they wanted. Wouldn’t an army, if it did not have rigid hierarchies, discipline, and central decision-making, these theorists argued, completely fall apart and be unable to function coherently? Do we want our economy to be the peacetime equivalent of an undisciplined throng (I’m looking at you, Zulus at Rorke’s Drift) while our enemies gain organizational superiority (as the Brits had at Rorke’s Drift)? While economists would probably point out the many problems with the analogy (different sets of goals of the two systems, the principled benefits of individual liberty, etc.), I would like to put these valid concerns aside for a moment and take the question at face value. Do military principles support the idea that individual decision-making is inferior to central control?
Historical evidence from Alexander the Great to the US Marine Corps suggests a major counter to this assertion, in the form of Auftragstaktik.

Auftragstaktik

Auftragstaktik was developed as a military doctrine by the Prussians following their losses to Napoleon, when they realized they needed a systematic way to overcome brilliant commanders. The idea that developed, the brainchild of Helmuth von Moltke, was that the traditional use of strict military hierarchy and central strategic control may not be as effective as giving only the general mission-based, strategic goals that truly necessitated central involvement to well-trained officers who were operating on the front, who would then have the flexibility and independence to make tactical decisions without consulting central commanders (or paperwork). Auftragstaktik largely lay dormant during World War I, but burst onto the scene as the method of command that allowed (along with the integration of infantry with tanks and other military technology) the swift success of the German blitzkrieg in World War II. This showed a stark difference in outcome between German and Allied command strategies, with the French expecting a defensive war and the Brits adhering faithfully and destructively to the centralized model. The Americans, when they saw that most bold tactical maneuvers happened without or even against orders, and that the commanders other than Patton generally met with slow progress, adopted the Auftragstaktik model. [Notably, this also allowed the Germans greater adaptiveness and ability when their generals died–should I make a bad analogy to Schumpeter’s creative destruction?] These methods may not even seem foreign to modern soldiers or veterans, as they are still actively promoted by the US Marine Corps.

All of this is well known to modern military historians and leaders: John Nelson makes an excellent case for its ongoing utility, and the excellent suggestion has also been made that its principles of decentralization, adaptability, independence, and lack of paperwork would probably be useful in reforming non-military bureaucracy. It has already been used and advocated in business, and its allowance for creativity, innovation, and reactiveness to ongoing complications gives new companies an advantage over ossified and bureaucratic ones (I am reminded of the last chapter of Parkinson’s Law, which roughly states that once an organization has purpose-built rather than adapted buildings it has become useless). However, I want to throw in my two cents by examining pre-Prussian applications of Auftragstaktik, in part to show that the advantages of decentralization are not limited to certain contexts, and in part because they give valuable insight into the impact of social structures on military ability and vice versa.

Historical Examples

Alexander the Great: Alexander was not just given exemplary training by his father, he also inherited an impressive military machine. The Macedonians had been honed by the conquest of neighboring Illyria, Thrace, and Paeonia, and the addition of Thessalian cavalry and Greek allies in the Sacred Wars. However, as a UNC ancient historian found, the most notable innovations of the Macedonians were their new siege technologies (which allowed a swifter war–one could say, a blitzkrieg–compared to earlier invasions of Persia) and their officer corps. This officer corps, made up of the king’s “companions,” was well trained in combined-arms hoplite and cavalry maneuvers, and during multiple portions of his campaign (especially in Anatolia and Bactria) operated as leaders of independent units that could cover a great deal more territory than one army. In set battles, the Macedonians showed a high degree of maneuverability, with oblique advances, effective use of reserves, and well-timed cavalry strikes into gaps in enemy formations, all of which depended on the delegation of tactical decision-making. This contrasted with the Persians, who followed standards into battle without organized ranks and files, and the Greek hoplites, whose phalanx depended mostly on cohesion and group action and therefore lacked flexibility. [Also, fun fact, the Macedonians had the only army in recorded history in which bodies of troops were identified systematically by the name of their leader. This promoted camaraderie and likely indicates that, long-term, the soldiers became used to the tactical independence and decision-making of that individual. Imagine dozens of Rogers’ Rangers.]

The Roman legion: As with any great empire, the Macedonians spread through their military innovations, but then ossified in technique over the next 150 years. When the Romans first faced a major Hellenistic general, Pyrrhus, they had already developed the principles of the system that would defeat the Macedonian army: the legion. In the early Roman legion, two centuries were combined into a maniple, and maniples were grouped into cohorts, allowing for detachment and independent command of differing group sizes. Crucially, centurions maintained discipline and the flexible but coordinated Roman formations, and military tribunes were given tactical control of groups both during and between battles. The flexibility of the Roman maniples was shown at the Battle of Cynoscephalae, in which the Macedonian phalanx–which had frontal superiority through its use of the sarissa and cohesion but little maneuverability–became disorganized on rough ground and was cut to pieces on one flank by the more mobile and individually capable Roman legionaries. This (as well as many battles in the Macedonian and Syrian Wars) showed the value of flexibility and individual action in a disciplined force, but where was the Auftragstaktik? At Cynoscephalae, after defeating one flank, the Romans on that flank dispersed to loot the Macedonian camp. In antiquity, this generally resulted in those troops becoming ineffective as a fighting force, and many a battle was lost because of premature looting. However, in this case, an unnamed tribune–to whom the duty of tactical decisions had been delegated–reorganized these looters and brought them to attack the rear of the other Macedonian flank, which had been winning. This resulted in a crushing victory and contributed to the Roman conquest of Greece.
Decentralized control was also a hallmark of Julius Caesar himself, who frequently sent several cohorts on independent campaigns in Gaul under subordinates such as Titus Labienus, allowing him to conquer the much more numerous Gauls through local superiority, lack of Gallic unity, and organization. Also, at the climactic Battle of Alesia, Caesar used small, mobile reserve units with a great deal of tactical independence to hold over 20 km of wooden walls against a huge besieging force.

The Vikings: I do not mean to generalize about Vikings (who could be of many nations–the term just means “raider”) when they do not have a united culture, but in their very diversity of method and origin, they demonstrate the effectiveness of individualism and decentralization. Despite being organized mostly based on ship-crews led by jarls, with central leadership only when won by force or chosen by necessity, Scandinavian longboatmen and warriors exerted their power from Svalbard to Constantinople to Sicily to Iceland and North America from the 8th to 12th centuries. The social organization of Scandinavia may have been the most free (in terms of individual will to do whatever one wants–including, unfortunately, slaughter, but also some surprisingly progressive women’s rights to decisions) in recorded history, and this was on display in the famous invasion of the Great Heathen Army. With as few as 3,500 farmer-raiders and 100 longboats to start, the legendary sons of Ragnar Lothbrok and the Danish invaders, with jarls as the major decision-makers of both strategic and tactical matters for their crews, won a series of impressive battles over 20 years (described in fascinating, if historical-fiction, detail in the wonderful book series and now TV series The Last Kingdom), almost never matching the number of combatants of their opponents, and took over half of England. The terror and military might associated with the Vikings in the memories of Western historians is a product of the completely decentralized, nearly anarchic methods of Scandinavian raiders.

The Mongols: You should be sensing a trend here: cultures that fostered lifelong training and discipline (and expertise in siege engineering, which seems to have correlated with the tactics I describe, as the Macedonians, Romans, and Mongols were each the most advanced siege engineers of their respective eras) tended to have more trust in well-trained subordinates. This brought them great military success and also makes them excellent examples of proto-Auftragstaktik. The Mongols not only had similar mission-oriented commands and tactical independence, but they also had two other aspects of their military that made them highly effective over an enormous territory: their favored style of horse-archer skirmishing gave natural flexibility and their clan organization allowed for many independently-operating forces stretching from Poland to Egypt to Manchuria. The Mongols, like the Romans, demonstrate how a force can have training/discipline without sacrificing the advantages based on tactical independence, and the two should never be mixed up!

The Americans in the French and Indian War and the Revolutionary War: Though this is certainly a more limited example, there were several units that performed far better than others among the Continentals. The aforementioned Rogers’ Rangers operated as a semi-autonomous attachment to regular forces during the French and Indian War, and were known for their mobility, individual experience and ability, and tactical independence in long-range, mission-oriented reconnaissance and ambushes. This use of savvy, experienced woodsmen in a semi-autonomous role was so effective that the ranger corps was expanded, and similar tactical independence, decentralized command, and maneuverability were championed by the Green Mountain Boys, the heroes of Ticonderoga. Morgan’s Rifles used similar experience and semi-autonomous flexibility to help win the crucial battles of Saratoga and Cowpens, which allowed the nascent Continental resistance to survive and thrive in the North outside of coastal cities and to capture much of the South, respectively. The forces of Francis Marion also used proto-guerrilla tactics with decentralized command and outperformed the regulars of Horatio Gates. Given the string of unsuccessful set-piece battles fought by General Washington and his more conventional subordinates, the Continentals depended on irregulars and unconventional warfare to survive and gain victories outside of major ports. These victories (especially Saratoga and Cowpens) cut off the British from the interior and forced them into stationary posts in a few cities–notably Yorktown–where Washington and the French could besiege them into submission. This may be comparable to the Spanish and Portuguese in the Peninsular War, but I know less about their organization, so I will leave the connection between Auftragstaktik and early guerrilla warfare to a better informed commenter.

These examples hopefully bolster the empirical support for the idea that military success has often been based, at least in part, on radically decentralizing tactical control, and trusting individual, front-line commanders to make mission-oriented decisions more effectively than a bureaucracy could. There are certainly many more, and feel free to suggest examples in the comments, but these are my favorites and probably the most influential. This evidence should cause a healthy skepticism toward arguments for central control on the basis of the efficiency or effectiveness demonstrated in military central planning. Given the development of new military technologies and methods of campaign (especially guerrilla and “lone wolf” attacks, which show a great deal of decentralized decision-making) and the increasing tendency since 2008 to revert toward ideas of central economic planning, we are likely to get a lot of new evidence about both sides of this fascinating analogy.
